UK, France crack down on tech giants for terrorist content

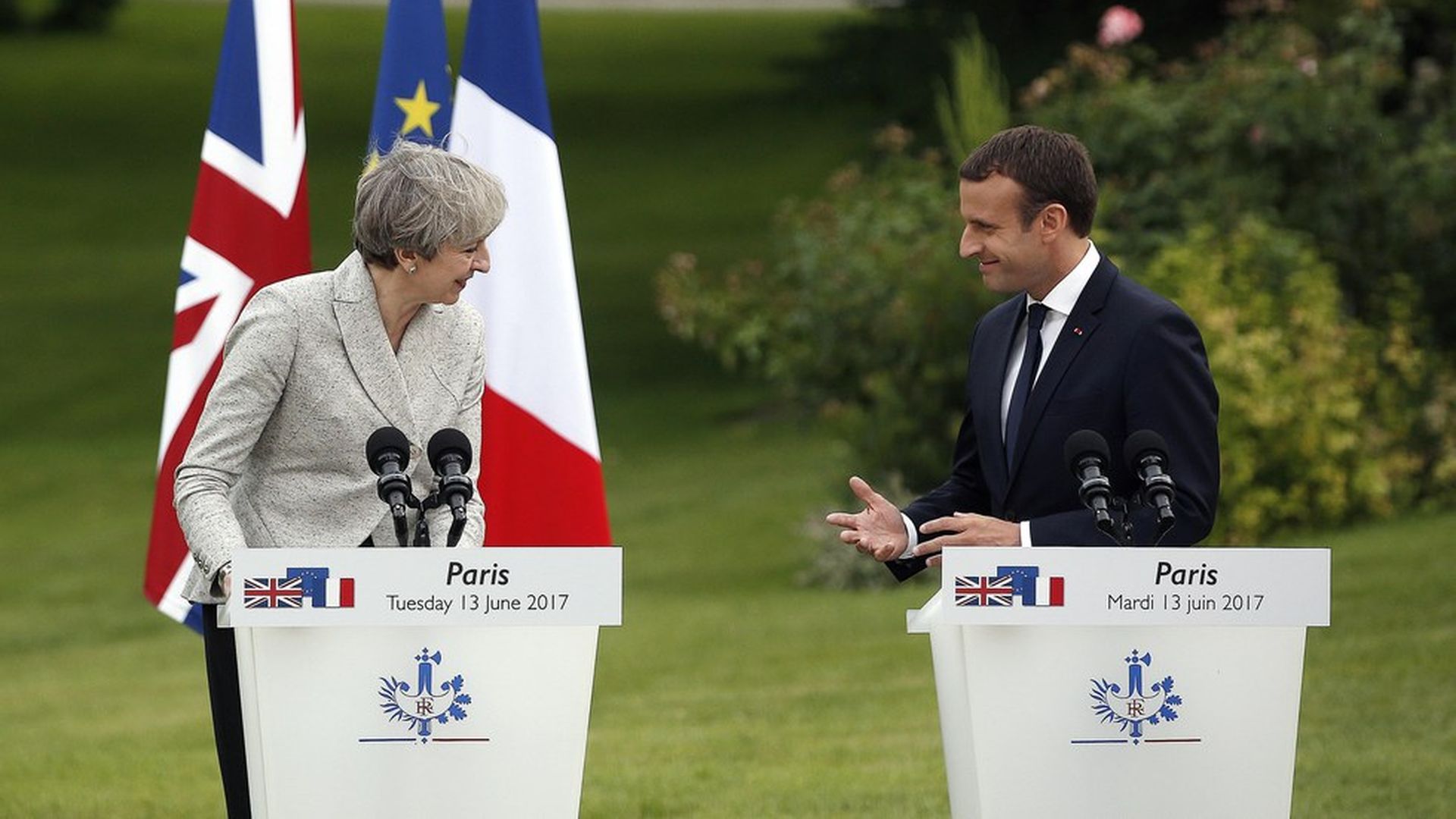

AP Photo/Thibault Camus

British PM Theresa May and French President Emmanuel Macron announced a joint campaign that targets terrorist propaganda on social media, the Guardian reports. The campaign proposes putting legal liability on companies like Google and Facebook for failing to control the presence of jihadist content on their platforms, which could result in fines.

Why it matters: European culture and policies tend to favor privacy and security more than those in the U.S. As a result, Google and Facebook have come under increasing pressure in Europe to censor and take action against violent content and hate speech on their platforms. And a recent evaluation found that companies are removing an increasing share of flagged hate speech, a year after major platforms agreed to follow a voluntary code of conduct in the European Union.

Why now: The agreement comes on the heels of terrorist attacks in Manchester, London and Paris, which have prompted European officials to take a bigger stand against leading internet companies for their roles in distributing terrorist propaganda.

Get smart fast: Increased use of social media by extremists will continue to force regulators and societies to more closely examine a fundamental question: Is there a point at which these platforms are viewed as an important enough part of critical infrastructure (national security, elections, etc.) that they require more significant regulation? For the U.K. and France, that point seems to be now. The tech companies have traditionally been wary of the idea that they should be responsible for the user content hosted on their platforms.

What they're saying: In the wake of the London terrorist attack, Facebook's Director of Policy Simon Milner told Reuters that Facebook wants its platform to be a "hostile environment" for terrorists. Facebook also announced a few weeks ago that it's adding 3,000 human editors on top of its existing 4,500 to help quickly monitor and remove inappropriate content. Google announced Tuesday a new two-hour removal policy to ensure nefarious content is taken down quickly once it is reported.

Some say it's not enough: "These companies (Google and Facebook) make it a point to celebrate 'moonshots' that require extraordinary vision, resources and engineering prowess," says Jason Kint, CEO of the trade association for premium publishers, Digital Content Next. "We believe they should pursue cleaning-up the garbage littering the digital media ecosystem with the same excitement, investment and vigilance."

Both companies state in their standards that hateful or terrorist content is unacceptable. A Facebook spokesperson tells Axios, "We want to provide a service where people feel safe. That means we do not allow groups or people that engage in terrorist activity, or posts that express support for terrorism." A YouTube spokesperson tells Axios, "We are working urgently to further improve how we deal with content that violates our policies and the law. These are complicated and challenging problems, but we are committed to doing better and being part of a lasting solution."