Axios Science

January 11, 2024

Welcome back to Axios Science. This edition is 1,540 words, about a 6-minute read.

- Send your feedback and ideas to me at [email protected].

- Sign up here to receive this newsletter.

🏔️ If you'll be in Davos this year, join me and my Axios colleagues for a week of events featuring conversations with OpenAI CEO Sam Altman, Google DeepMind COO Lila Ibrahim and others. RSVP to join us here.

1 big thing: It can be hard for AI to forget

Illustration: Aïda Amer/Axios

Users want answers from artificial intelligence, but as the technology moves into daily life and raises legal and ethical concerns, sometimes they also want AI to forget things, too. Researchers are working on ways to make that possible — and finding machine unlearning is a puzzling problem.

Why it matters: Copyright laws and privacy regulations that give people the "right to be forgotten," along with concerns about AI that is biased or generates toxic outputs, are driving interest in techniques that can remove traces of data from algorithms without interfering with the model's performance.

Deleting information from computer storage is a straightforward process, but today's AI doesn't copy information into memory — it trains neural networks to recognize and then reproduce relationships among bits of data.

- "Unlearning isn't as straightforward as learning," Microsoft researchers recently wrote. It's like "trying to remove specific ingredients from a baked cake — it seems nearly impossible."

How it works: Machine learning algorithms are trained on a variety of data from different sources in a time-consuming and expensive process.

- One obvious way to remove the influence of a specific piece of data — because it is incorrect, biased, protected, dangerous or sensitive in some other way — is to take it out of the training data and then retrain the model.

- But the high cost of computation means that is basically a "non-starter," says Seth Neel, a computer scientist and professor at Harvard Business School.

Driving the news: A machine unlearning competition that wrapped up in December asked participants to remove some facial images used to train an AI model that can predict someone's age from an image.

- About 1,200 teams entered the challenge, devising and submitting new unlearning algorithms, says co-organizer Peter Triantafillou, a professor of data science at the University of Warwick. The work will be described in a future paper.

What's happening: Researchers are trying a variety of approaches to machine unlearning.

- One involves splitting up the original training dataset for an AI model and using each subset of data to train many smaller models that are then aggregated to form a final model. If some data then needs to be removed, only one of the smaller models has to be retrained. That can work for simpler models but may hurt the performance of larger ones.

- Another technique involves tweaking the neural network to de-emphasize the data that's supposed to be "forgotten" and amplify the rest of the data that remains.

- Other researchers are trying to determine where specific information is stored in a model and then edit the model to remove it.

Yes, but: "Here's the problem: Facts don't exist in a localized or atomized manner inside of a model," says Zachary Lipton, a machine learning researcher and professor at Carnegie Mellon University. "It isn't a repository where all the facts are cataloged."

- And a part of a model involved in knowing about one thing is also involved in knowing about other things.

2. Part II: Where it stands

There is a "tug of war between the ability of the network to keep working correctly on the data that it has been trained on and has remained, and basically forgetting the data that people want to forget," Triantafillou says.

- He and his colleagues presented a paper at a top AI conference in December that detailed an algorithm for several unlearning needs, including removing bias, correcting data that is mislabeled — purposely or accidentally — and addressing privacy concerns.

Zoom in: There's particular interest in unlearning for generative language models like those that power ChatGPT and other AI tools.

- Microsoft researchers recently reported being able to make Llama 2, a model trained by Meta, forget what it knows about the world of Harry Potter.

- But other researchers audited the unlearned model and found that, by rewording the questions they posed, they could get it to show it still "knew" some things about Harry Potter.

Where it stands: The field is "a little messy right now because people don't have good answers to some questions," including how to measure whether something has been removed, says Gautam Kamath, a computer scientist and professor at the University of Waterloo.

- It's a pressing question if companies are going to be held liable for people's requests that their information be deleted or if policymakers are going to mandate unlearning.

Details: From an abstract perspective, what is being unlearned and what it means to unlearn aren't clearly defined, Lipton says.

- But Neel says there is a working definition of "reverting the model to a state that is close to a model that would have resulted without these points." He adds there are reasonable metrics to evaluate these methods, pointing to work by Triantafillou as well as his own research that evaluates whether an adversary attack can tell if certain data points were used to train a network.

- Still, he says, there is "a lot more work to be done": "For simple models, we know how to do unlearning and have rigorous guarantees," but for more complex models, there isn't "consensus on a single best method and there may never be."

What to watch: There are a range of potential applications for unlearning.

- Some settings may not be "super, high-stakes end-of-the-world," Neel says. For example, with copyright concerns, it might be that it is sufficient to stop a model from reproducing something verbatim but still be able to tell what points were used in training.

- It may be a case-by-case scenario involving negotiations between "the party requesting deleting and model owner," he says.

- But others could require complete unlearning of information that poses potentially catastrophic security consequences or serious privacy concerns. Here, Lipton says, there aren't actionable methods and near-term policy mandates should "proceed under the working assumption that (as of yet) mature unlearning technology does not exist."

3. Study: More tiny plastic particles in bottled water than previously thought

Photo: Joel Saget/AFP via Getty Images

Bottled water contains more nanoplastic particles than previously thought, according to new research published in the Proceedings of the National Academy of Sciences on Monday, Axios' Jacob Knutson writes.

Why it matters: The tiny plastic nanoparticles are a growing concern for human health and the environment because of their ubiquity, ability to pass through biological barriers in animals, including humans, and their potentially toxic effects on living organisms.

How it works: Nanoplastics are an extremely small subclass of microplastics, but because of their size, they are potentially more dangerous than larger fragments of plastics.

- The tiny particles, imperceptible to the naked eye, have dimensions ranging from 1 nanometer to 1 micron. (For comparison, human hair is on average about 83 microns wide.)

- They are so small they have been observed penetrating living cells and interfering with the functions of organelles, like mitochondria and lysosomes, potentially contributing to metabolic and functional disorders.

- Nanoplastics appear capable of moving up through the food chain and have been seen crossing the blood-brain barrier in fish, inducing brain damage.

Yes, but: Due to their size, researchers have found it difficult to study nanoplastics.

- This has left major gaps in what we know about nanoplastics, such as how risky they are to human health and how they may function in nature.

By the numbers: In the new study, Columbia University researchers used a new laser-based microscopy technique paired with an algorithm that could identify specific types of plastic and analyzed 25 liters of bottled water from three U.S. brands.

- They found that on average, one liter of water, which is about two standard-size bottled waters, contained 240,000 particles of seven different types of plastic, about 90% of which were nanoplastics.

- Their results were orders of magnitude more than the microplastic abundance reported in previous research on bottled water, likely because those studies considered only larger microplastics.

- The remaining 10% of the observed plastic particles were larger microplastic fragments.

4. Worthy of your time

How CRISPR could yield the next blockbuster crop (Michael Marshall — Nature)

To predict snowfall, NASA planes fly into the storm (Susan Cosier — Scientific American - paywall)

How the largest primate to roam Earth went extinct (Jacob Knutson — Axios)

Scientists scrutinize happiness research (Amber Dance — Knowable)

5. Something wondrous

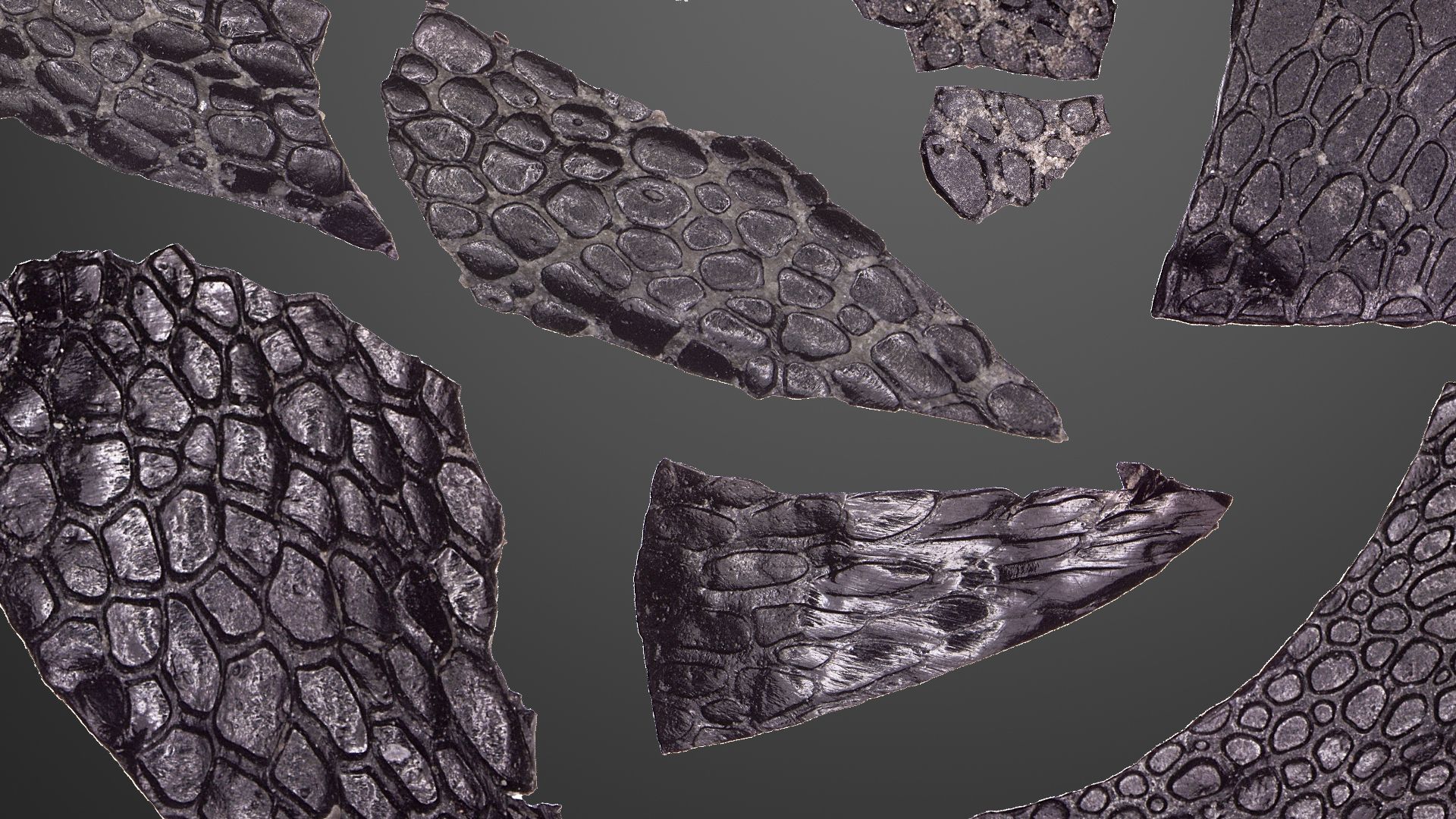

Fossilized skin. Credit: Mooney et al., Current Biology 2024

A 289-million-year-old fossil is the oldest known sample of epidermis, researchers report today.

Why it matters: The rare fossil is believed to be from a reptile that lived before the dinosaurs and could provide clues about how the body's largest organ evolved.

- The epidermis, or outermost layer of skin, in terrestrial reptiles, birds, and mammals was "an important evolutionary adaptation in the transition to life on land," per a press release.

Details: The fossilized skin was discovered in a cave system in Oklahoma, where it was preserved by ideal conditions, including fine clay sediments that slowed the tissue's decomposition and oil seepage that helped to preserve it.

- The surface of the sample, which is smaller than a fingernail, is scaled and pebbled, according to the paper published today in the journal Current Biology.

- The skin's structure may have allowed for the evolution of bird feathers and hair follicles in mammals, the authors write.

The big picture: "Every now and then we get an exceptional opportunity to glimpse back into deep time," study co-author Ethan Mooney, a paleontology graduate student at the University of Toronto, said in a statement.

- "These types of discoveries can really enrich our understanding and perception of these pioneering animals."

Big thanks to managing editor Scott Rosenberg, photo editor Aïda Amer and copy editor Carolyn DiPaolo.

Sign up for Axios Science

Gather the facts on the latest scientific advances