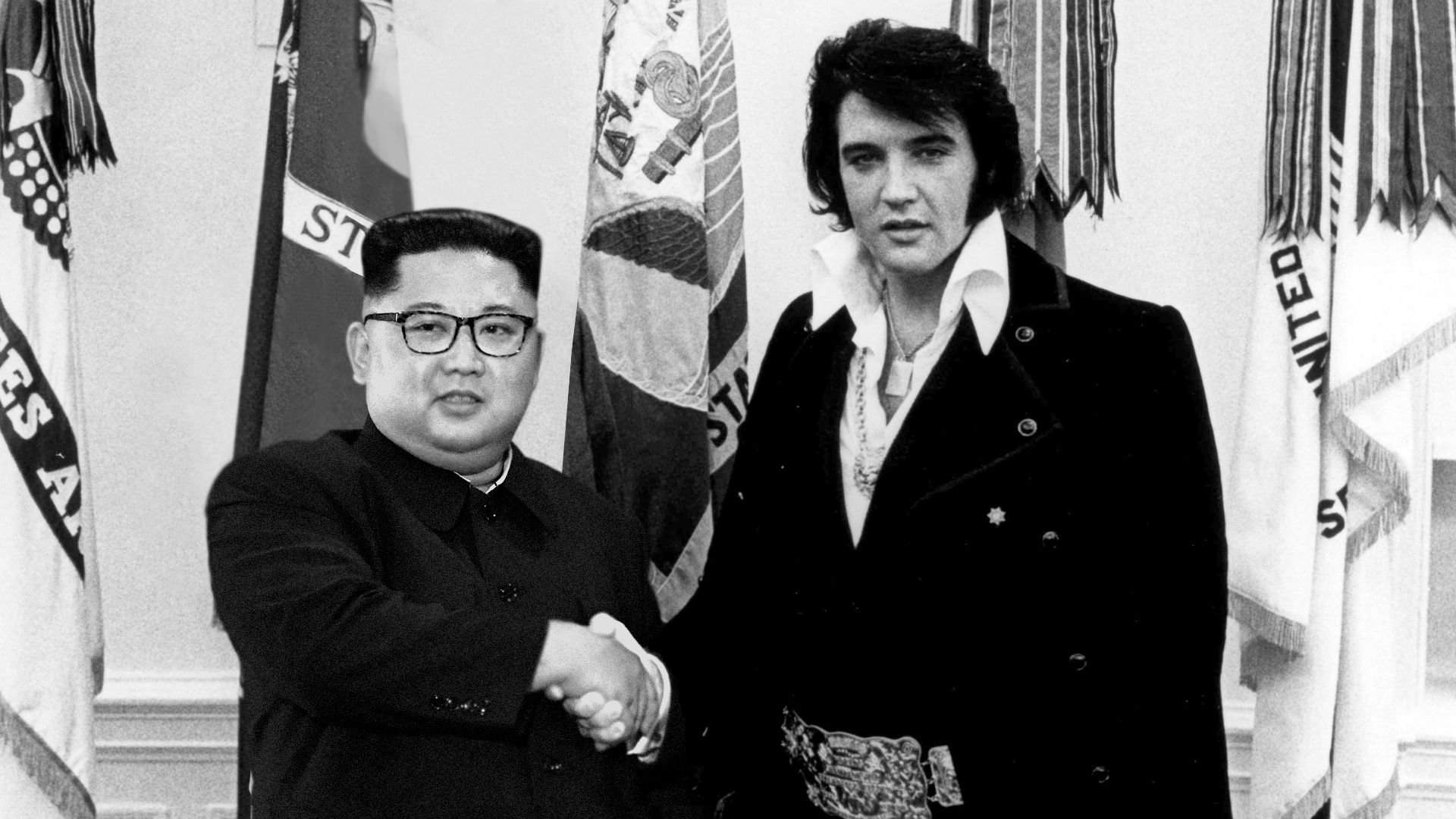

Kim Jong-Un with Elvis. Photoshop: Lazaro Gamio/Axios

Researchers are in a pitched battle against deepfakes, the convincing fake images, audio and video created by artificial intelligence algorithms, but it could take years before they invent a system that can sniff out most or all of them, experts tell Axios.

Why it matters: A fake video of a world leader making an incendiary threat could, if widely believed, set off a trade war — or a conventional one. Just as dangerous is the possibility that deepfake technology spreads to the point that people are unwilling to trust video or audio evidence.

The big picture: Publicly available software makes it easy to create sophisticated fake videos without having to understand the machine learning that powers it. Most software swaps one person’s face onto another’s body, or makes it look like someone is saying something they didn’t.

This has ignited an arms race between fakers and sleuths.

- In one corner are academics developing face-swap tech that could be used for special-effects departments, plus myriad online pranksters and troublemakers. Doctored photos are a stock-in-trade of the internet, but as far as experts know, AI has not yet been used by state actors or political campaigns to produce deepfakes.

- Arrayed against them are other academic researchers, plus private companies and government entities like DARPA and Los Alamos National Laboratory, all of whom have marshaled resources to try to head off deepfakes.

Facing an uphill fight, the deepfake detectives have approached the problem from numerous angles.

- Gfycat, a gif-hosting platform, banned deepfake porn and uses a pair of tools to take down offending clips. One compares the faces in each frame of a gif to detect anomalies that could give away a fake; the other checks whether a new gif has simply pasted a new face onto a previously uploaded clip.

- Researchers at SUNY Albany created a system that monitors blinking patterns in a video to determine whether the video is genuine.

- Hany Farid, a Dartmouth professor and member of DARPA's media forensics team, favors a physics-based approach that analyzes images for giveaway inconsistencies like incorrect lighting on an AI-generated face. He says non-AI, forensics-based reasoning is easier to explain to humans, such as the members of a jury.

- Los Alamos researchers are creating a neurologically inspired system that searches for invisible tells that photos are AI-generated. They are testing for compressibility, or how much information the image actually contains. Generated images are simpler than real photos, because they reuse visual elements. The repetition is subtle enough to trick the eye, but not a specially trained algorithm.
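The compressibility idea can be illustrated with a rough sketch. This is not the Los Alamos system, whose details are not described here; it assumes a general-purpose compressor (Python's `zlib`) as a stand-in for a trained detector, and it uses synthetic byte buffers rather than real images. The function name `compression_ratio` and both sample buffers are hypothetical.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size.

    A lower ratio means the data contains more repetition,
    i.e. less information per byte.
    """
    if not data:
        raise ValueError("data must be non-empty")
    return len(zlib.compress(data, 9)) / len(data)


# Hypothetical stand-ins for raw pixel buffers (not real images):
# one reuses the same 16-byte tile, the other has no reusable structure.
repetitive = bytes(range(16)) * 4096
noisy = os.urandom(16 * 4096)

# The buffer that reuses elements compresses far better than the noisy one.
print(compression_ratio(repetitive) < compression_ratio(noisy))
```

Real photos and generated images differ far more subtly than these two buffers, which is why the researchers rely on a specially trained algorithm rather than an off-the-shelf compressor.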

AI might never catch 100% of fakes, said Juston Moore, a data scientist at Los Alamos. "But even if it’s a cat-and-mouse game," he said, "I think it’s one worth playing."

What’s next: We’re years away from a comprehensive system to battle deepfakes, said Farid. It would require new technological advances as well as answers to thorny policy questions that have already proven extremely difficult to solve.

Assuming the technology is worked out, here is how it could be implemented:

- An independent website that verifies uploaded photos and videos. Verified content could be displayed in a gallery for reference.

- A platform-wide verification system on social-media sites like Twitter, Facebook, and Reddit that checks every user-uploaded item before allowing it to be posted. A badge displayed alongside an item could mark it as verified.

- A tracking system for the origin of a video, image, or audio clip. Blockchain could play a role, and a company called Truepic has raised money to use it for this purpose.

- Watermarks could be placed on images verified as real or deepfakes. Farid said that one possibility is to add an invisible signature to images created with Google’s TensorFlow technology, which powers the most popular currently available deepfake generator.
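Origin tracking of the kind a blockchain might support can be sketched as a simple hash chain. This is an illustration under stated assumptions, not a description of Truepic's product or of any real platform: the record fields and the function names `append_record` and `verify_chain` are hypothetical, and a real system would also need signatures and distributed storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def record_hash(prev_hash: str, content_digest: str, note: str) -> str:
    """Hash one record's fields together with the previous record's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "content": content_digest, "note": note},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain: list, content: bytes, note: str) -> None:
    """Append a provenance record for `content` to the chain."""
    prev = chain[-1]["hash"] if chain else GENESIS
    digest = hashlib.sha256(content).hexdigest()
    chain.append({
        "prev": prev,
        "content": digest,
        "note": note,
        "hash": record_hash(prev, digest, note),
    })


def verify_chain(chain: list) -> bool:
    """Return True only if every record links to and matches its predecessor."""
    prev = GENESIS
    for rec in chain:
        expected = record_hash(rec["prev"], rec["content"], rec["note"])
        if rec["prev"] != prev or rec["hash"] != expected:
            return False
        prev = rec["hash"]
    return True


chain = []
append_record(chain, b"original video bytes", "captured")
append_record(chain, b"cropped video bytes", "cropped")
print(verify_chain(chain))
```

Because each record's hash covers the previous one, altering any earlier entry, for example swapping in the digest of a doctored clip, causes `verify_chain` to fail.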

The big question: Will tech companies implement such protections if they might be seen as infringing on free speech? Social networking companies face a similar conundrum when policing extremist content.
